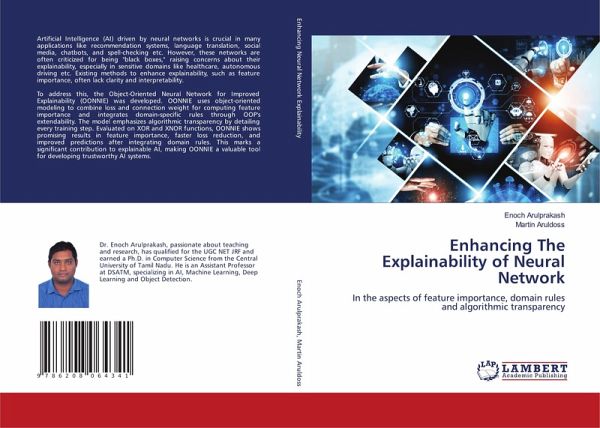

Enhancing The Explainability of Neural Network

In the aspects of feature importance, domain rules and algorithmic transparency

Artificial Intelligence (AI) driven by neural networks is crucial in many applications, such as recommendation systems, language translation, social media, chatbots, and spell checking. However, these networks are often criticized as "black boxes", which raises concerns about their explainability, especially in sensitive domains such as healthcare and autonomous driving. Existing methods for improving explainability, such as feature importance, often lack clarity and interpretability.

To address this, the Object-Oriented Neural Network for Improved Explainability (OONNIE) was developed. OONNIE uses object-oriented modeling to combine the loss and the connection weights when computing feature importance, and it integrates domain-specific rules by exploiting the extensibility of object-oriented programming (OOP). The model emphasizes algorithmic transparency by documenting every training step. Evaluated on the XOR and XNOR functions, OONNIE shows promising results in feature importance, faster loss reduction, and improved predictions once domain rules are integrated. This makes OONNIE a significant contribution to explainable AI and a valuable tool for developing trustworthy AI systems.
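The blurb does not give OONNIE's formulas, so the following is only a minimal Python sketch of the general ideas it names: a small object-oriented network trained on XOR, a per-epoch loss log for transparency, a weight-based feature importance, and a subclass hook for a domain rule. All names (`TinyNetwork`, `RuleAwareNetwork`, `feature_importance`, the example rule) are hypothetical. The importance measure is a generic connection-weight product (in the spirit of Garson/Olden-style measures), not OONNIE's combination of loss and weights, and the rule hook is an assumed way of using subclassing to inject domain knowledge.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyNetwork:
    """Hypothetical one-hidden-layer sigmoid network, trained by per-sample gradient descent."""

    def __init__(self, n_inputs: int = 2, n_hidden: int = 4, lr: float = 0.5, seed: int = 0):
        rng = random.Random(seed)
        self.lr = lr
        # w[j][i]: input i -> hidden j; v[j]: hidden j -> output; b, c: biases
        self.w = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.v = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.c = rng.uniform(-1, 1)
        self.history = []  # transparency log: (epoch, mean squared error) per epoch

    def _forward(self, x):
        h = [sigmoid(sum(wi * xi for wi, xi in zip(wj, x)) + bj) for wj, bj in zip(self.w, self.b)]
        y = sigmoid(sum(vj * hj for vj, hj in zip(self.v, h)) + self.c)
        return h, y

    def predict(self, x) -> float:
        return self._forward(x)[1]

    def train(self, data, epochs: int = 5000):
        for epoch in range(epochs):
            total = 0.0
            for x, target in data:
                h, y = self._forward(x)
                err = y - target
                total += err * err
                # Backpropagate the squared error through the sigmoid output
                d_out = err * y * (1 - y)
                for j, hj in enumerate(h):
                    # Hidden delta uses v[j] before it is updated
                    d_hid = d_out * self.v[j] * hj * (1 - hj)
                    self.v[j] -= self.lr * d_out * hj
                    for i, xi in enumerate(x):
                        self.w[j][i] -= self.lr * d_hid * xi
                    self.b[j] -= self.lr * d_hid
                self.c -= self.lr * d_out
            self.history.append((epoch, total / len(data)))
        return self.history

    def feature_importance(self):
        """Generic stand-in: sum_j |w[j][i] * v[j]|, normalised to sum to 1 (not OONNIE's formula)."""
        raw = [sum(abs(self.w[j][i] * self.v[j]) for j in range(len(self.v)))
               for i in range(len(self.w[0]))]
        total = sum(raw)
        return [r / total for r in raw]


class RuleAwareNetwork(TinyNetwork):
    """Extension point: a subclass overrides predict() to apply a domain rule (hypothetical hook)."""

    def __init__(self, rule, **kwargs):
        super().__init__(**kwargs)
        self.rule = rule  # callable (x, y) -> adjusted y

    def predict(self, x) -> float:
        return self.rule(x, super().predict(x))


XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

if __name__ == "__main__":
    net = TinyNetwork()
    history = net.train(XOR)
    print("first/last loss:", history[0][1], history[-1][1])
    print("feature importance:", net.feature_importance())
    for x, t in XOR:
        print(x, t, round(net.predict(x), 3))
```

For XNOR, the same code applies with the targets inverted. Keeping the rule outside the training loop means it adjusts predictions without altering the learned weights; whether OONNIE applies its rules this way is not stated in the blurb.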