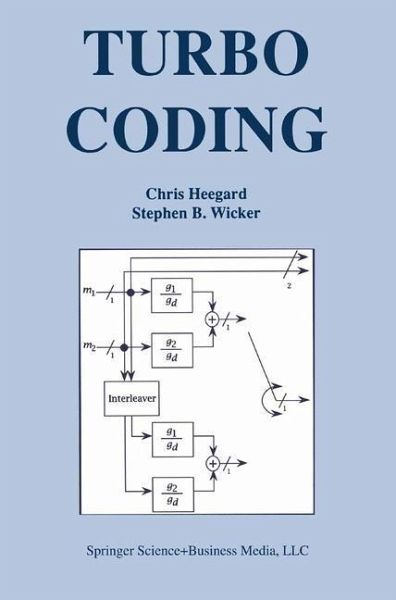

Turbo Coding

Turbo Coding presents a unified view of the revolutionary field of turbo error control coding, summarizing recent results in the areas of encoder structure and performance analysis. The book also introduces new material, including a general theory for the analysis and design of interleavers, and a unified framework for the analysis and design of decoding algorithms.

Turbo Coding explains the basics of turbo error control coding in a straightforward manner, while making its potential impact on the design of digital communication systems as clear as possible. Chapters have been provided on the structure and performance of convolutional codes, interleaver design, and the structure and function of iterative decoders. The book also provides insight into the theory that underlies turbo error control, and briefly summarizes some of the ongoing research efforts. Recent efforts to develop a general theory that unites the Viterbi and BCJR algorithms are discussed in detail. A chapter is provided on the newly discovered connection between iterative decoding and belief propagation in graphs, showing that this leads to parallel algorithms that outperform currently used turbo decoding algorithms.

Turbo Coding is a primary resource for both researchers and teachers in the field of error control coding.

When the 50th anniversary of the birth of Information Theory was celebrated at the 1998 IEEE International Symposium on Information Theory in Boston, there was a great deal of reflection on the year 1993 as a critical year. As the years pass and more perspective is gained, it is a fairly safe bet that we will view 1993 as the year when the "early years" of error control coding came to an end. This was the year in which Berrou, Glavieux and Thitimajshima presented "Near Shannon Limit Error-Correcting Coding and Decoding: Turbo Codes" at the International Conference on Communications in Geneva. In their presentation, Berrou et al. claimed that a combination of parallel concatenation and iterative decoding can provide reliable communications at a signal-to-noise ratio that is within a few tenths of a dB of the Shannon limit. Nearly fifty years of striving to achieve the promise of Shannon's noisy channel coding theorem had come to an end. The implications of this result were immediately apparent to all: coding gains on the order of 10 dB could be used to dramatically extend the range of communication receivers, increase data rates and services, or substantially reduce transmitter power levels. The 1993 ICC paper set in motion several research efforts that have permanently changed the way we look at error control coding.