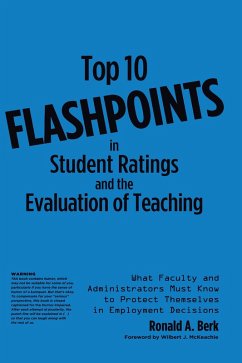

Thirteen Strategies to Measure College Teaching (eBook, ePUB)

A Consumer's Guide to Rating Scale Construction, Assessment, and Decision-Making for Faculty, Administrators, and Clinicians

* Student evaluations of college teachers: perhaps the most contentious issue on campus
* This book offers a more balanced approach
* Evaluation affects pay, promotion and tenure, so of intense interest to all faculty
* Major academic marketing and publicity
* Combines original research with Berk's signature wacky humor

To many college professors the words "student evaluations" trigger mental images of the shower scene from Psycho, with those bloodcurdling screams. They're thinking: "Why not just whack me now, rather than wait to see those ratings again." This book takes off from the premise that student ratings are a necessary, but not sufficient source of evidence for measuring teaching effectiveness. It is a fun-filled--but solidly evidence-based--romp through more than a dozen other methods that include measurement by self, peers, outside experts, alumni, administrators, employers, and even aliens.

As the major stakeholders in this process, both faculty AND administrators, plus clinicians who teach in schools of medicine, nursing, and the allied health fields, need to be involved in writing, adapting, evaluating, or buying items to create the various scales to measure teaching performance. This is the first basic introduction in the faculty evaluation literature to take you step-by-step through the process to develop these tools, interpret their scores, and make decisions about teaching improvement, annual contract renewal/dismissal, merit pay, promotion, and tenure. It explains how to create appropriate, high quality items and detect those that can introduce bias and unfairness into the results.

Ron Berk also stresses the need for "triangulation"--the use of multiple, complementary methods--to provide the properly balanced, comprehensive and fair assessment of teaching that is the benchmark of employment decision making.

This is a must-read to empower faculty, administrators, and clinicians to use appropriate evidence to make decisions accurately, reliably, and fairly. Don't trample each other in your stampede to snag a copy of this book!

For legal reasons, this download can only be delivered to customers with a billing address in A, B, BG, CY, CZ, D, DK, EW, E, FIN, F, GR, HR, H, IRL, I, LT, L, LR, M, NL, PL, P, R, S, SLO, SK.